Samsung Electronics is set to begin mass production of the world’s first 6th-generation high-bandwidth memory (HBM4) next week, with shipments to start after the Lunar New Year holiday. The chips are destined for NVIDIA’s next-generation Vera Rubin AI accelerators, marking a major leap in memory performance for the generative AI era.

In the high-stakes race to power the world's artificial intelligence, a critical bottleneck has long been memory: the speed at which data can be fed to hungry AI processors. Now that bottleneck is about to widen significantly. Samsung, the South Korean tech giant, is taking the wraps off the next major evolution in memory technology and pushing the industry into a new performance tier.

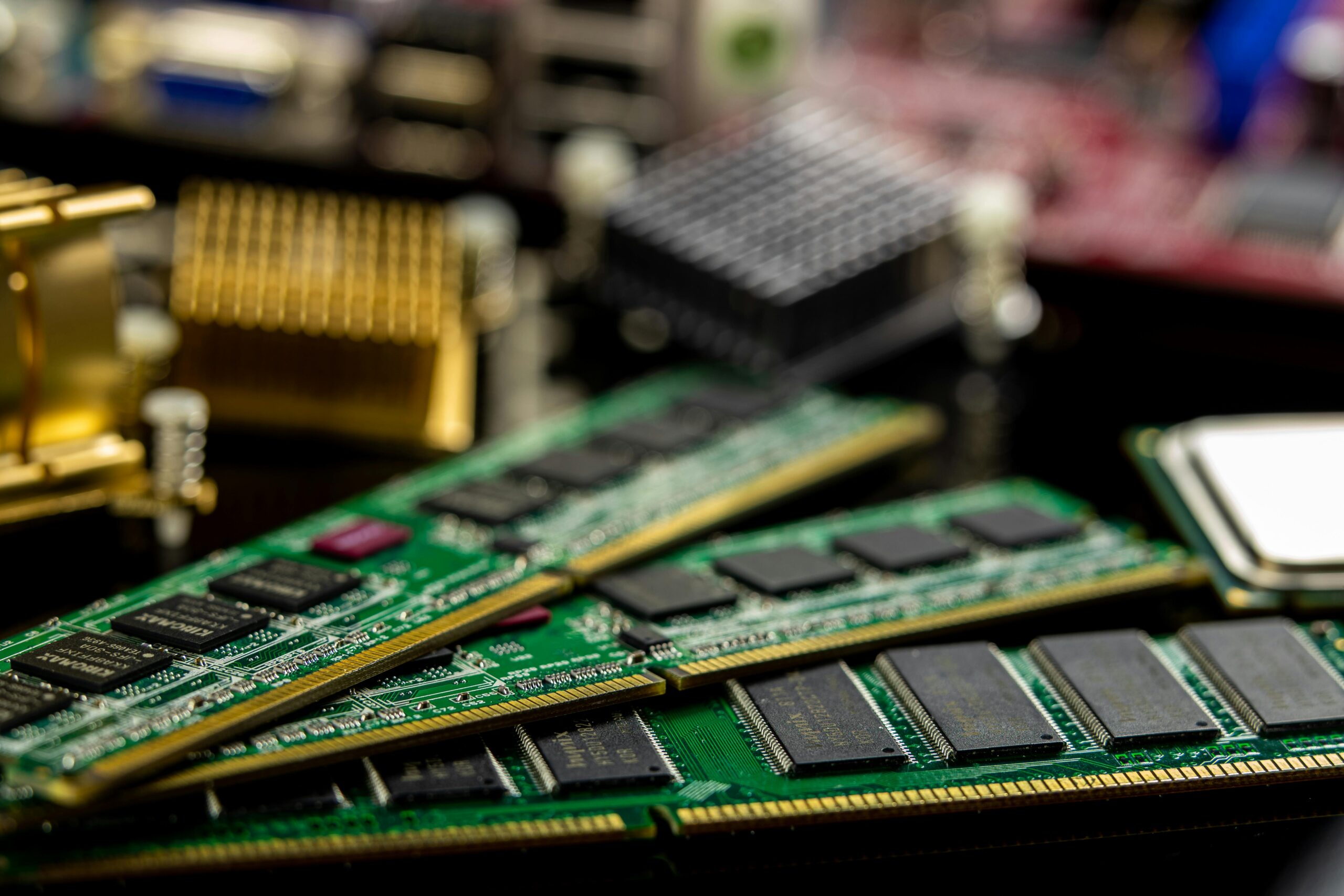

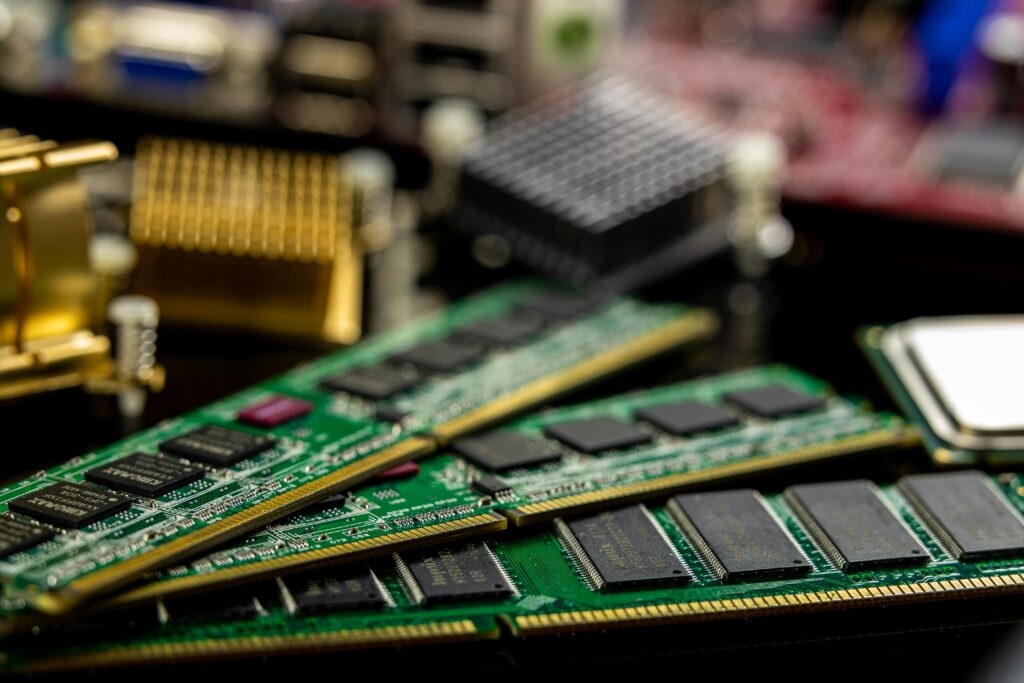

According to a report from Seoul-based Yonhap News, Samsung will kick off volume production of its HBM4 chips in the coming days, with the first shipments slated for right after the Lunar New Year. This isn't just an incremental update. HBM4 is the sixth generation of high-bandwidth memory, a technology that stacks DRAM dies vertically and links them through a very wide interface, delivering the enormous data transfer rates needed to train and run large AI models.


HBM4 addresses one of the most pressing hardware limitations in advanced AI computing: memory bandwidth. As AI models grow rapidly in size and complexity, the existing HBM3 and HBM3E standards are being pushed to their limits. The new generation is designed to break through that ceiling so that NVIDIA's powerful GPUs spend less time sitting idle while they wait for data.
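To put "memory bandwidth" in concrete terms, a single HBM stack's theoretical peak is roughly its interface width multiplied by its per-pin data rate. The short Python sketch below compares two stacks under stated assumptions: the 2048-bit interface and 8 Gb/s per-pin rate are the baseline figures in the JEDEC HBM4 standard, while the 1024-bit, 9.6 Gb/s HBM3E configuration is an assumed typical value. Neither figure comes from the Yonhap report, the speeds of Samsung's shipping parts may differ, and the helper `peak_bandwidth_tb_s` is purely illustrative.

```python
# Illustrative only: theoretical peak bandwidth of one HBM stack.
# Assumed inputs: 2048-bit / 8 Gb/s (JEDEC HBM4 baseline) and
# 1024-bit / 9.6 Gb/s (an assumed typical HBM3E configuration).

def peak_bandwidth_tb_s(width_bits: int, gbps_per_pin: float) -> float:
    """Hypothetical helper: peak bandwidth of one stack in TB/s (decimal units)."""
    gigabytes_per_s = width_bits * gbps_per_pin / 8  # total Gb/s -> GB/s (8 bits per byte)
    return gigabytes_per_s / 1000  # GB/s -> TB/s

hbm3e = peak_bandwidth_tb_s(1024, 9.6)
hbm4 = peak_bandwidth_tb_s(2048, 8.0)

print(f"HBM3E: ~{hbm3e:.2f} TB/s per stack")
print(f"HBM4:  ~{hbm4:.2f} TB/s per stack")
```

Doubling the interface width is what lets HBM4 outpace HBM3E even at a slightly lower per-pin rate in this comparison. Real-world throughput falls below these theoretical peaks, and an accelerator multiplies them by the number of stacks it carries.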

In practical terms, these chips will be integrated directly into NVIDIA's upcoming Vera Rubin AI accelerator GPUs. They act as a super-highway for data: the memory stacks sit on the same package as the GPU die, which shortens the data path and sharply increases the volume of information that can be moved and processed at once. This allows faster training of sophisticated AI such as large language models and more efficient inference in real-time applications.

The drive to develop and commercialize HBM4 has been led by Samsung's memory division, one of the world's foremost semiconductor manufacturing teams. Its HBM4 chips have passed NVIDIA's rigorous quality certification process, a critical gatekeeper for components destined for NVIDIA's flagship AI products.


While the launch is a major technical achievement, it comes with intense pressure. The production timeline is exceptionally tight, set specifically to align with NVIDIA's own launch schedule for the Vera Rubin platform. This leaves Samsung little room for error in ramping up yield rates, especially amid fierce competition from rivals such as SK Hynix.

The broader value of this milestone extends beyond a single product launch. It reinforces the symbiotic relationship between cutting-edge memory and leading-edge AI processors. By securing this first major order, Samsung not only captures a key market but also helps enable the next wave of AI capabilities, from more accurate medical diagnostics to more powerful autonomous systems.

The report confirms that Samsung has already increased the volume of HBM4 samples for final module testing by customers, indicating that the transition from validation to full-scale production is well underway. With the global HBM market currently led by 5th-generation HBM3E chips, HBM4 is poised to become the new benchmark, defining performance for the next generation of data centers and AI research.


For NVIDIA, securing a reliable, advanced memory supply is crucial for maintaining its dominance in the AI hardware space. For the industry, Samsung’s move signals that the infrastructure behind the AI revolution is continuing to advance at a breakneck pace, ensuring that the software of tomorrow will have the hardware it needs to run.